Virtual Threads Make Blocking Cheap (But Not Free)

Explanation, Code Examples and more.

Introduction

For years in Java backend development, blocking meant wasted threads, exhausted pools, and systems that collapsed under load. With virtual threads, delivered by Project Loom and finalized in Java 21, the assumption that blocking is expensive no longer holds, but only if we understand what actually changed.

In this article, we will look at why blocking was expensive, how virtual threads changed the cost model, and where blocking is still dangerous, with code examples along the way.
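As a first taste of that change, here is a minimal sketch, assuming Java 21 or later. It submits thousands of tasks that each make a plain blocking call (`Thread.sleep`, standing in for real I/O) to `Executors.newVirtualThreadPerTaskExecutor()`, which starts one virtual thread per task. The class `VirtualBlocking`, its `run` method, and the task counts are illustrative choices, not part of any library.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class VirtualBlocking {
    record Result(int completed, long elapsedMillis) {}

    static Result run(int tasks, Duration block) {
        AtomicInteger completed = new AtomicInteger();
        long start = System.nanoTime();
        // One new virtual thread per task; close() (via try-with-resources) waits for all of them.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        // Plain blocking call: the virtual thread unmounts, freeing its carrier thread.
                        Thread.sleep(block);
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
        return new Result(completed.get(), elapsedMillis);
    }

    public static void main(String[] args) {
        // 10,000 concurrent "requests", each blocking for 100 ms.
        System.out.println(run(10_000, Duration.ofMillis(100)));
    }
}
```

Timings vary by machine, so treat the printed elapsed time as illustrative; the point is that the code stays in a simple blocking style while the number of concurrent tasks far exceeds what a pool of OS threads would comfortably hold.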

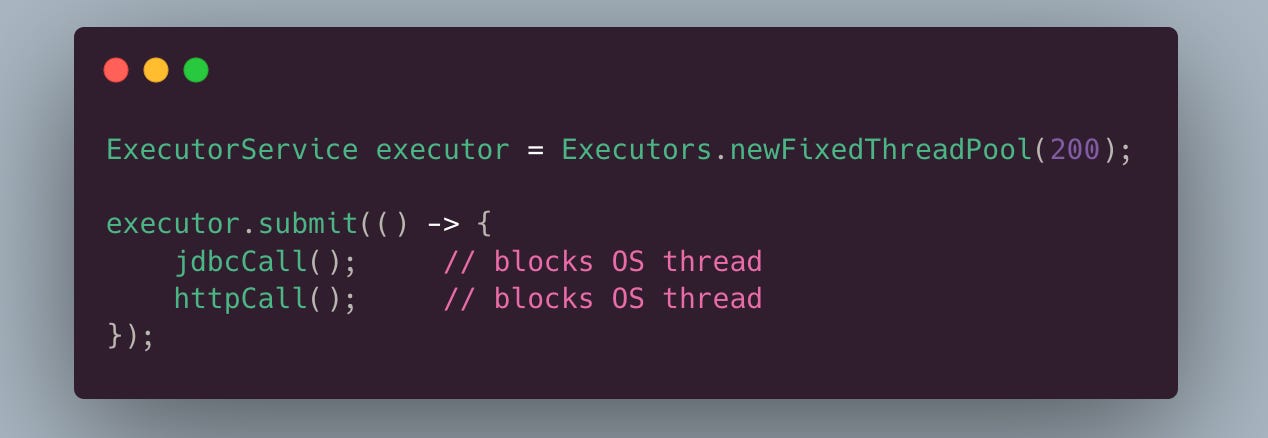

Why Blocking Was Expensive Before Loom

In the traditional thread-per-request Java model, each request occupies:

One OS thread

A large, fixed-size stack (~1–2MB reserved per thread, tunable with -Xss)

Kernel scheduling resources
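To make the thread-per-request model concrete, here is a minimal sketch, assuming Java 21 (for the `Thread.ofPlatform()` builder). Each simulated request gets its own platform thread, which is backed one-to-one by an OS thread. The `handle` method is hypothetical and its `Thread.sleep` stands in for blocking I/O; the `stackSize` value is only a request that the JVM may round or ignore on some platforms.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class ThreadPerRequest {
    record Result(int handled, boolean anyVirtual) {}

    // Hypothetical handler: the sleep stands in for a blocking DB or HTTP call.
    static void handle(AtomicInteger handled) {
        try {
            Thread.sleep(50);
            handled.incrementAndGet();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Starts one platform (OS-backed) thread per request, then waits for all of them.
    static Result serve(int requests) {
        AtomicInteger handled = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            threads.add(Thread.ofPlatform()
                    .name("request-" + i)
                    .stackSize(1024 * 1024) // request ~1 MB of stack; the JVM may adjust or ignore it
                    .start(() -> handle(handled)));
        }
        boolean anyVirtual = false;
        for (Thread t : threads) {
            anyVirtual |= t.isVirtual();
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return new Result(handled.get(), anyVirtual);
    }

    public static void main(String[] args) {
        // Each of the 100 requests holds its own OS thread for its whole lifetime.
        System.out.println(serve(100)); // prints "Result[handled=100, anyVirtual=false]"
    }
}
```

Scaling this to tens of thousands of requests means tens of thousands of OS threads and stacks, which is exactly the cost described above.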

If the thread blocks on I/O:

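The OS thread sits idle until the I/O completes

No other work can use it in the meantime

Once every thread in a bounded pool is blocked, new requests queue up and throughput collapses under high concurrency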
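The collapse is easy to reproduce. This minimal sketch, assuming Java 21 (where `ExecutorService` is `AutoCloseable`), sends 20 simulated requests that each block for 100 ms to a fixed pool of 4 platform threads. `BlockedPool`, `Stats`, and the numbers are illustrative. Because a blocked thread cannot pick up other work, at most 4 requests are ever in flight and the rest wait in the queue.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class BlockedPool {
    record Stats(int completed, int peakConcurrent, long elapsedMillis) {}

    static Stats run(int poolSize, int tasks, long blockMillis) {
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        AtomicInteger completed = new AtomicInteger();
        long start = System.nanoTime();
        // close() at the end of the try block waits for every submitted task to finish.
        try (ExecutorService pool = Executors.newFixedThreadPool(poolSize)) {
            for (int i = 0; i < tasks; i++) {
                pool.submit(() -> {
                    // Record how many requests are being served at the same moment.
                    peak.accumulateAndGet(inFlight.incrementAndGet(), Math::max);
                    try {
                        Thread.sleep(blockMillis); // simulated blocking I/O: the OS thread just waits
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    } finally {
                        inFlight.decrementAndGet();
                    }
                });
            }
        }
        long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
        return new Stats(completed.get(), peak.get(), elapsedMillis);
    }

    public static void main(String[] args) {
        // 4 threads, 20 requests blocking 100 ms each: 5 waves, so at least ~500 ms.
        System.out.println(run(4, 20, 100));
    }
}
```

With 20 tasks and 4 threads the work runs in 5 waves of 100 ms, so the elapsed time is at least about 500 ms even though the CPU is almost completely idle the whole time.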

This forced developers to:

Limit thread counts

Avoid blocking

Embrace async callbacks or reactive frameworks
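For contrast, here is a minimal sketch of the callback style, using the JDK's `CompletableFuture`. `fetchUser` and `fetchOrders` are hypothetical stand-ins for non-blocking clients; a real system would wrap asynchronous I/O rather than `supplyAsync` on the common pool.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;

class CallbackStyle {
    // Hypothetical async "clients": placeholders for non-blocking I/O calls.
    static CompletableFuture<String> fetchUser(int id) {
        return CompletableFuture.supplyAsync(() -> "user-" + id);
    }

    static CompletableFuture<List<String>> fetchOrders(String user) {
        return CompletableFuture.supplyAsync(() -> List.of(user + ":order-1", user + ":order-2"));
    }

    // Blocking equivalent would be: String user = fetchUser(id); List<String> orders = fetchOrders(user); ...
    // Here the same logic becomes a chain of callbacks, so no thread waits on I/O.
    static CompletableFuture<String> handleRequest(int id) {
        return fetchUser(id)
                .thenCompose(CallbackStyle::fetchOrders)           // runs once the user is available
                .thenApply(orders -> orders.size() + " orders")    // runs once the orders are available
                .exceptionally(ex -> "error: " + ex.getMessage()); // error handling is also a callback
    }

    public static void main(String[] args) {
        // join() is used only so the demo prints before the JVM exits.
        System.out.println(handleRequest(42).join()); // prints "2 orders"
    }
}
```

Logic that would be a few sequential lines in blocking code is spread across `thenCompose`, `thenApply`, and `exceptionally`. That style avoids idle threads, but at a cost in readability and debuggability, which is why cheap blocking is attractive.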

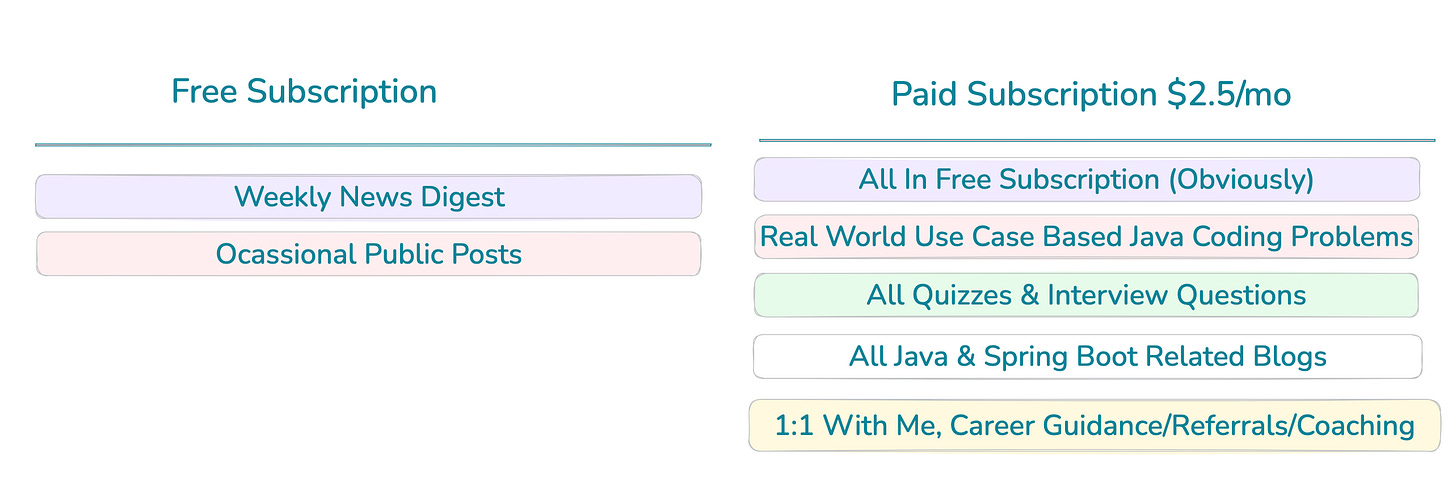

Virtual Threads: What Actually Changed